VSense

Photo by Changmin

Summary

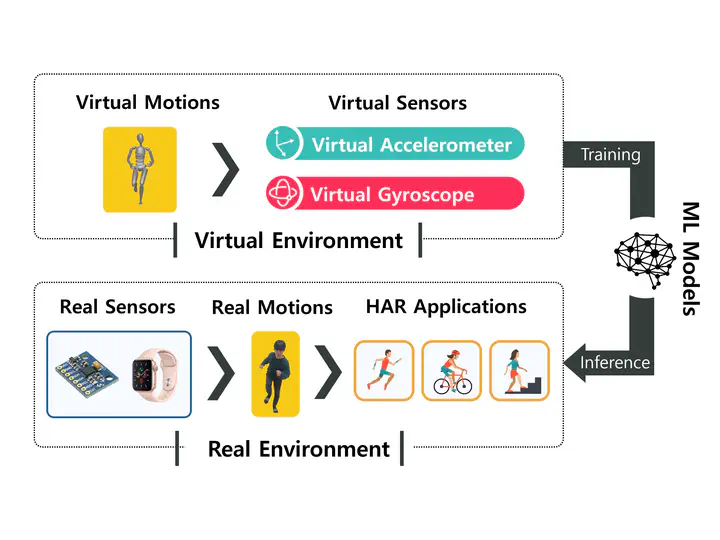

The amount of data available from wearable sensors is small compared to infrastructure-based sensing such as cameras and Wi-Fi. To compensate for this limited sensor data, we propose generating sensor data in a virtual world and using it for activity recognition. We generate virtual sensor data in Unity and apply data augmentation techniques to motion capture data, which lets us collect data from a variety of environments. Supplementing the existing limited dataset in this way improved activity recognition performance.
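As a rough illustration of how virtual sensor data can be derived from motion data, the sketch below turns a sequence of sampled positions (for example, a wrist joint exported from a motion capture clip) into accelerometer-like readings with a central second difference. It is not the project's actual implementation: the function name `virtual_acceleration`, the Y-up gravity vector, and the fixed sampling interval `dt` are all assumptions, and the rotation into the sensor's local frame that a real IMU model would need is left out.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

# Assumption: Y-up world frame (as in Unity), gravity in m/s^2.
GRAVITY: Vec3 = (0.0, -9.81, 0.0)


def virtual_acceleration(positions: List[Vec3], dt: float) -> List[Vec3]:
    """Approximate accelerometer readings from sampled world positions.

    Uses the central second difference
        a[i] = (p[i+1] - 2 * p[i] + p[i-1]) / dt^2
    and subtracts gravity, because an accelerometer measures specific
    force (a sensor at rest reads about +9.81 m/s^2 along the up axis).
    The result stays in the world frame; rotating it into the sensor's
    own frame is omitted in this sketch.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    readings: List[Vec3] = []
    for i in range(1, len(positions) - 1):
        prev, cur, nxt = positions[i - 1], positions[i], positions[i + 1]
        linear = [(n - 2.0 * c + p) / (dt * dt) for p, c, n in zip(prev, cur, nxt)]
        readings.append(
            (linear[0] - GRAVITY[0], linear[1] - GRAVITY[1], linear[2] - GRAVITY[2])
        )
    return readings


# Usage: a point accelerating along x at 2 m/s^2, sampled at 10 Hz.
dt = 0.1
track = [((i * dt) ** 2, 0.0, 0.0) for i in range(6)]
samples = virtual_acceleration(track, dt)
```

The first and last positions produce no reading because the central difference needs a neighbor on each side, so `samples` has two fewer entries than `track`.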

My Role

I worked on this project as a co-lead. I was responsible for every step, including generating virtual sensor data, building an activity recognition pipeline, and collecting real-world IMU data.

Accomplishments:

- Gained experience turning ideas into concrete designs, implementing them, and validating them through experimentation.

- Implemented virtual motion synthesis with Unity.

- Implemented virtual sensor data generation with Unity and Python.

- Implemented an activity recognition pipeline in Python using scikit-learn and TensorFlow.

- Collected real-world IMU data with an IMU sensor and an Arduino board.

- Designed and conducted experiments and analyzed the results.
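The pipeline's internals are not described here. As a hedged illustration of a common first stage in IMU-based activity recognition, the sketch below segments a one-axis signal into overlapping windows and computes simple per-window statistics that a classifier could consume. The helper names `sliding_windows` and `window_features`, the window size, and the choice of mean and standard deviation as features are all assumptions, and the standard library is used in place of scikit-learn or TensorFlow to keep the example self-contained.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def sliding_windows(signal: Sequence[float], size: int, step: int) -> List[Sequence[float]]:
    """Split a signal into fixed-size windows, advancing `step` samples each time.

    Trailing samples that do not fill a complete window are dropped.
    """
    if size <= 0 or step <= 0:
        raise ValueError("size and step must be positive")
    return [signal[s:s + size] for s in range(0, len(signal) - size + 1, step)]


def window_features(window: Sequence[float]) -> List[float]:
    """Summarize one window as [mean, population standard deviation]."""
    return [mean(window), pstdev(window)]


# Usage: 10 samples, windows of 4 samples with 50% overlap.
signal = [float(v) for v in range(10)]
windows = sliding_windows(signal, size=4, step=2)
features = [window_features(w) for w in windows]
```

Each row of `features` is one fixed-length feature vector, the shape that classifiers such as those in scikit-learn expect as input.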